Hey folks! So we are going to use an LLM locally to answer questions based on a given CSV dataset.

We will be using Llama2, an open-source LLM, running locally through Ollama. That way we don't have to set up API keys and it's completely free. However, you will have to make sure your device meets the hardware requirements to run the model: for the smallest models (7B parameters), you need at least 8GB of RAM.

At the time of writing, Ollama isn't available natively for Windows, but if you have WSL2 you can install it with the following command (this also works on Linux):

```bash
curl https://ollama.ai/install.sh | sh
```

Or, if you have a Mac, download:

https://ollama.ai/download/Ollama-darwin.zip

Once it's installed, run the following command to start the Ollama server:

```bash
ollama serve
```

By default, this server runs at http://localhost:11434.
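Besides going through LangChain, you can talk to this server directly over its REST API, which makes a handy sanity check. Here is a minimal sketch using only Python's standard library. It assumes Ollama's `POST /api/generate` endpoint with `stream` set to false (so the server sends back one JSON object whose `response` field holds the text) and that llama2 has already been pulled. `build_generate_request` and `ask_ollama` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default address of `ollama serve`


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint (hypothetical helper)."""
    # stream=False asks for a single JSON reply instead of a stream of chunks
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(model: str, prompt: str) -> str:
    """Send the request and return the generated text from the "response" field."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `print(ask_ollama("llama2", "Why is the sky blue?"))` should print the model's answer. Keeping the request builder separate from the network call makes it easy to inspect exactly what is sent.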

You can also run a model in the command prompt and interact with it directly, for example with `ollama run llama2`.

Now let's create a very small CSV dataset for demonstration purposes. You can also use your own CSV file.

Copy and paste the following data and save it as Marks.csv in your workspace directory:

```csv
Student_Name,Subject,Marks
Jack,Math,90
John,Math,60
Mary,Math,70
Peter,Math,80
```

Inside the same directory, create two files called streamlit_ui.py and langchain_helper.py.
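If you'd rather generate Marks.csv from Python than copy and paste it, a small sketch with the standard `csv` module writes the same four rows. `write_marks_csv` is a hypothetical helper name; it writes to the current working directory by default.

```python
import csv
from pathlib import Path

# Header first, then one row per student (same data as above)
ROWS = [
    ["Student_Name", "Subject", "Marks"],
    ["Jack", "Math", 90],
    ["John", "Math", 60],
    ["Mary", "Math", 70],
    ["Peter", "Math", 80],
]


def write_marks_csv(path: Path = Path("Marks.csv")) -> Path:
    """Write the demo dataset to `path` and return the path (hypothetical helper)."""
    # newline="" lets the csv module control line endings itself
    with path.open("w", newline="") as f:
        csv.writer(f).writerows(ROWS)
    return path
```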

Before we proceed, allow me to introduce you to LangChain.

LangChain is a framework that helps you develop LLM applications easily and with fewer lines of code. It comes with a set of libraries (available in both Python and JavaScript) that let you implement agents (to carry out a set of tasks) and chains, add features such as context and memory for LLMs, and use many integrations with providers like OpenAI and Ollama, tools, and much more.

Vector databases store data as vectors: high-dimensional numerical representations of the data, instead of the traditional rows and columns. They make storing and retrieving these vectors fast and efficient, and that empowers LLMs: through similarity search, an application can retrieve relevant information and provide it to the LLM as context. For example, similar words such as “happy” and “joyful” have vectors that are close to each other:

- "happy": [0.2, 0.8, 0.5]

- "joyful": [0.19, 0.82, 0.49]
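To make "close to each other" concrete, here is a small sketch that compares the toy vectors above with cosine similarity, one common way to measure how similar two embeddings are (vector stores may use other metrics, such as Euclidean distance). The `sad` vector is made up purely for contrast; real embeddings have far more than three dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


happy = [0.2, 0.8, 0.5]
joyful = [0.19, 0.82, 0.49]
sad = [0.9, 0.1, 0.2]  # hypothetical vector, invented for contrast

print(round(cosine_similarity(happy, joyful), 4))  # 0.9997
print(round(cosine_similarity(happy, sad), 4))     # much lower
```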

These vectors are also commonly known as embeddings. You can store many types of data (text, images, videos) as embeddings. With a vector database, an LLM application can work around two limitations of the model itself: it doesn't have the latest information, and it can't access user-specific data.

Last introduction, I promise:

Combining an LLM with a vector database lets you bring external knowledge to the LLM. Together with some additional components for loading, splitting, and storing the data, this follows the idea of Retrieval-Augmented Generation (RAG): you retrieve relevant external data and pass it into the model's prompt as context. This is different from fine-tuning, which changes the model's weights by training it on new data.
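The retrieve-then-prompt idea can be sketched in a few lines of plain Python. This toy version swaps real embeddings for simple word overlap (an assumption made only to keep it self-contained). It ranks the rows by how many words they share with the question, then pastes the best matches into the prompt as context. `tokens`, `retrieve`, and `build_prompt` are hypothetical names; the model itself is never modified, which is the key difference from fine-tuning.

```python
import re

# Toy knowledge base: the same rows our CSV will later provide as documents
DOCUMENTS = [
    "Student_Name: Jack\nSubject: Math\nMarks: 90",
    "Student_Name: John\nSubject: Math\nMarks: 60",
    "Student_Name: Mary\nSubject: Math\nMarks: 70",
    "Student_Name: Peter\nSubject: Math\nMarks: 80",
]


def tokens(text: str) -> set[str]:
    """Lower-cased set of words; stands in for a real embedding in this sketch."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents sharing the most words with the question."""
    q = tokens(question)
    return sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)[:k]


def build_prompt(question: str, docs: list[str]) -> str:
    """Augmentation step: retrieved text is placed in the prompt as context."""
    context = "\n\n".join(retrieve(question, docs))
    return f"Given this context: {context}, please directly answer the question: {question}."


print(build_prompt("What marks did Mary get?", DOCUMENTS))
```

The resulting string is what would be sent to the LLM (the generation step).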

For our example, let's use ChromaDB, a popular open-source vector database. Some other options are Marqo, Supabase, etc.; you can find the full list of supported vector stores in the LangChain documentation.

Let's Code

Here is a diagram to help you get an idea of the flow:

Install the following libraries:

```bash
pip install langchain chromadb
```

Now add the following code to langchain_helper.py:

```python
from langchain.llms import Ollama
from langchain.prompts import PromptTemplate
from langchain.chains import RetrievalQA
from langchain.document_loaders import CSVLoader
from langchain.vectorstores import Chroma
from langchain.embeddings import OllamaEmbeddings


def get_insights(question):
    # Load the CSV data: CSVLoader creates one Document per row
    loader = CSVLoader("Marks.csv")
    documents = loader.load()

    # Create embeddings with the local Llama2 model served by Ollama
    embeddings = OllamaEmbeddings(model="llama2")

    # Store the documents and their embeddings in Chroma under ./chroma_db
    # (note: every call re-embeds the CSV and adds the rows to the store again)
    chroma_db = Chroma.from_documents(
        documents, embeddings, persist_directory="./chroma_db"
    )
    chroma_db.persist()  # optional with Chroma 0.4+, which persists automatically

    llm = Ollama(model="llama2")

    # The chain fills {context} with the retrieved rows and {question} with
    # the user's query, so both variables must be declared here
    prompt_template = PromptTemplate(
        input_variables=["context", "question"],
        template="Given this context: {context}, please directly answer the question: {question}.",
    )

    # Set up the question-answering chain
    qa_chain = RetrievalQA.from_chain_type(
        llm,
        retriever=chroma_db.as_retriever(),
        chain_type_kwargs={"prompt": prompt_template},
    )

    # Returns a dict with the original "query" and the generated "result"
    result = qa_chain({"query": question})
    return result
```

Code Explanation

The CSVLoader creates one document per row:

```
[Document(page_content='Student_Name: Jack\nSubject: Math\nMarks: 90', metadata={'source': 'Marks.csv', 'row': 0}),
 Document(page_content='Student_Name: John\nSubject: Math\nMarks: 60', metadata={'source': 'Marks.csv', 'row': 1}),
 Document(page_content='Student_Name: Mary\nSubject: Math\nMarks: 70', metadata={'source': 'Marks.csv', 'row': 2}),
 Document(page_content='Student_Name: Peter\nSubject: Math\nMarks: 80', metadata={'source': 'Marks.csv', 'row': 3})]
```

Also note that you are not limited to CSVs: you can also scrape web pages or load PDFs, emails, Excel sheets, and much more.
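To see how each row turns into a document's text, here is a rough standard-library approximation of the formatting visible in the output above: one `column: value` line per field, one string per row. It is a sketch, not LangChain's implementation (the real CSVLoader also attaches metadata and supports more options), and `rows_to_page_contents` is a hypothetical name.

```python
import csv
import io


def rows_to_page_contents(csv_text: str) -> list[str]:
    """Approximate CSVLoader's page_content: "column: value" lines, one string per row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return ["\n".join(f"{col}: {val}" for col, val in row.items()) for row in reader]


marks_csv = "Student_Name,Subject,Marks\nJack,Math,90\nJohn,Math,60\n"
for content in rows_to_page_contents(marks_csv):
    print(repr(content))
# 'Student_Name: Jack\nSubject: Math\nMarks: 90'
# 'Student_Name: John\nSubject: Math\nMarks: 60'
```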

Next, we create the embeddings with LangChain's Ollama embedding integration (OllamaEmbeddings), passing the name of the model we want to use. It accepts other parameters as well, such as the embed instruction, the number of GPUs to use, stop tokens, top_k, etc. Then we load the documents and their embeddings into ChromaDB. The persist_directory parameter is optional: when you pass it, a folder called chroma_db is created containing a file called chroma.sqlite3. Without it, LangChain's Chroma wrapper keeps the data in memory only. With Chroma 0.4 and later, data in a persist_directory is saved to disk automatically, so the explicit persist() call is no longer required.

If you are curious, you can look at what the embeddings look like by adding the following code:

```python
embeddings = OllamaEmbeddings(model="llama2")

# embed_query expects a single string, so embed the text of the first row
query_result = embeddings.embed_query(documents[0].page_content)

print(query_result[:5])
```

The output is a list of floats; for example, the first five values looked like this (yours will differ):

```
[-1.4050122499465942, -0.4291639029979706, -0.603569507598877, 1.2300854921340942, 0.9346778392791748]
```

If you are also curious about the generated sqlite3 file, you can go into the chroma_db folder from the terminal and check out the tables and the data inside.
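If you'd rather poke at that file from Python, a minimal sketch with the standard `sqlite3` module can list its tables. It assumes the database sits at chroma_db/chroma.sqlite3 as described above; the table names depend on your Chroma version, so none are assumed here. `list_tables` is a hypothetical helper, and it opens the file read-only so it never creates or modifies anything.

```python
import sqlite3
from contextlib import closing
from pathlib import Path


def list_tables(db_path: str) -> list[str]:
    """Return the table names in a SQLite file, opened in read-only mode."""
    uri = f"file:{Path(db_path).as_posix()}?mode=ro"
    # closing() makes sure the connection is closed, not just committed
    with closing(sqlite3.connect(uri, uri=True)) as conn:
        rows = conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        ).fetchall()
    return [name for (name,) in rows]
```

For example, `print(list_tables("chroma_db/chroma.sqlite3"))` after the app has run once.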

LangChain also lets us craft prompt templates. We pass the context and question variables to the prompt, and the prompt is passed to RetrievalQA, which is a chain for question answering against an index. RetrievalQA fills in the context itself with the documents it retrieves from the vector store. By default, the chain uses “stuff” as the chain type: all the documents retrieved from the vector store are stuffed into a single prompt. This won't be suitable for large amounts of data, so LangChain provides other chain types such as map_reduce, refine, and map_rerank.

Now let’s install streamlit for the user interface so we can pass user questions to the function we created before.

pip install streamlitPaste the code below into streamlit_ui.py:

import streamlit as st

import langchain_helper as lch

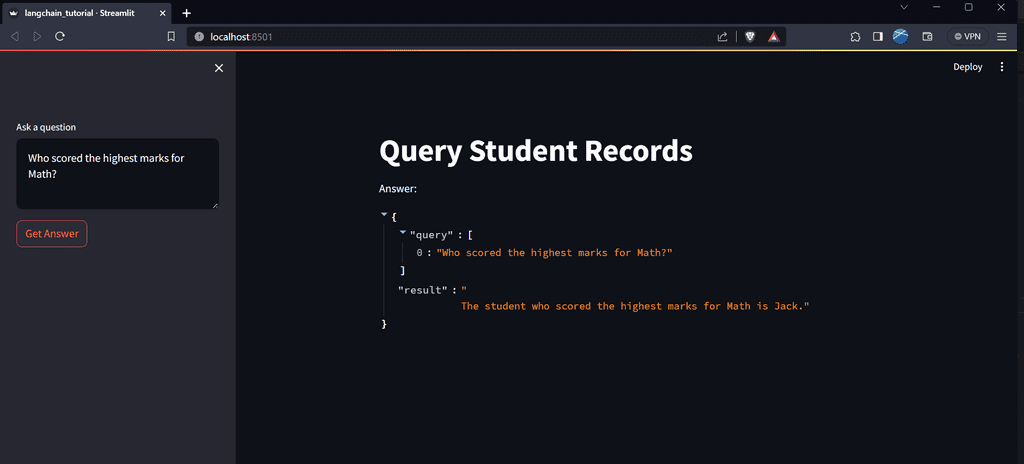

st.title("Query Student Records")

question = st.sidebar.text_area(label=f"Ask a question")

if st.sidebar.button("Get Answer"):

response = lch.get_insights(question)

st.write("Answer:", response)Now run:

streamlit run streamlit_ui.pyType your question and check it out!

In the terminal where you ran ollama serve, you will notice several API calls to /api/embeddings while the embeddings are being created.
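Those requests come from OllamaEmbeddings, which (as far as the server log suggests) sends one request per piece of text. Here is a minimal standard-library sketch of a single such request, assuming Ollama's `POST /api/embeddings` endpoint, which takes `model` and `prompt` and replies with a JSON object containing an `embedding` list. `build_embeddings_request` and `embed_text` are hypothetical helper names.

```python
import json
import urllib.request


def build_embeddings_request(
    model: str, text: str, base_url: str = "http://localhost:11434"
) -> urllib.request.Request:
    """POST request for Ollama's /api/embeddings endpoint (hypothetical helper)."""
    body = json.dumps({"model": model, "prompt": text}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/embeddings",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def embed_text(model: str, text: str) -> list[float]:
    """Send the request and return the vector from the reply's "embedding" field."""
    with urllib.request.urlopen(build_embeddings_request(model, text)) as resp:
        return json.loads(resp.read())["embedding"]
```

With the server running, `embed_text("llama2", "Student_Name: Jack\nSubject: Math\nMarks: 90")` should return one vector, and you should see a matching /api/embeddings line in the ollama serve terminal.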

Limitations

For better answers, use a more carefully crafted prompt. You can find such prompt templates in the LangChain Hub: https://smith.langchain.com/hub?organizationId=d9ea56f8-ed98-5632-b376-dff134eb0275

LLMs are generally bad at calculations. You could combine the LLM with an agent that uses a math tool.
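As an illustration of the kind of "math tool" an agent could call, here is a plain-Python function that averages the marks for a subject exactly, instead of asking the LLM to do the arithmetic. The function name is hypothetical, and wiring it into a LangChain agent is not shown; the point is only that the calculation belongs in code.

```python
import csv
import io

MARKS_CSV = """Student_Name,Subject,Marks
Jack,Math,90
John,Math,60
Mary,Math,70
Peter,Math,80
"""


def average_marks(csv_text: str, subject: str) -> float:
    """Exact average of the Marks column for one subject (hypothetical tool function)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    marks = [int(row["Marks"]) for row in reader if row["Subject"] == subject]
    if not marks:
        raise ValueError(f"no rows for subject {subject!r}")
    return sum(marks) / len(marks)


print(average_marks(MARKS_CSV, "Math"))  # 75.0
```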

You can also implement chat history and provide memory by using LangChain methods:

https://python.langchain.com/docs/modules/memory/

https://python.langchain.com/docs/use_cases/question_answering/chat_history